KOMPAS.com - An AI arms race is already underway. That's the blunt warning from Germany's foreign minister, Heiko Maas.

"We're right in the middle of it. That's the reality we have to deal with," Maas told DW, speaking in a new DW documentary, "Future Wars — and How to Prevent Them." It's a reality at the heart of the struggle for supremacy between the world's greatest powers.

"This is a race that cuts across the military and the civilian fields," said Amandeep Singh Gill, former chair of the United Nations group of governmental experts on lethal autonomous weapons. "This is a multi-trillion dollar question."

Great powers pile in

The race is evident in a recent report from the United States' National Security Commission on Artificial Intelligence.

It speaks of a "new warfighting paradigm" pitting "algorithms against algorithms," and urges massive investments "to continuously out-innovate potential adversaries."

And you can see it in China's latest five-year plan, which places AI at the center of a relentless ramp-up in research and development, while the People's Liberation Army girds for a future of what it calls "intelligentized warfare."

As Russian President Vladimir Putin put it as early as 2017, "whoever becomes the leader in this sphere will become the ruler of the world."

But it's not only great powers piling in. Much further down the pecking order of global power, this new era is a battle-tested reality.

German Foreign Minister Heiko Maas: 'We have to forge international treaties on new weapons technologies'

Watershed war

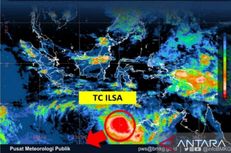

In late 2020, as the world was consumed by the pandemic, festering tensions in the Caucasus erupted into war.

It looked like a textbook regional conflict, with Azerbaijan and Armenia fighting over the disputed region of Nagorno-Karabakh. But for those paying attention, this was a watershed in warfare.

"The really important aspect of the conflict in Nagorno-Karabakh, in my view, was the use of these loitering munitions, so-called 'kamikaze drones' — these pretty autonomous systems," said Ulrike Franke, an expert on drone warfare at the European Council on Foreign Relations.

'Loitering munitions' saw action in the 2020 Nagorno-Karabakh war

Bombs that loiter in the air

Advanced loitering munition models are capable of a high degree of autonomy. Once launched, they fly to a defined target area, where they "loiter," scanning for targets, typically air defense systems.

Once they detect a target, they fly into it, destroying it on impact with an onboard payload of explosives; hence the nickname "kamikaze drones."

"They also had been used in some way or form before — but here, they really showed their usefulness," Franke explained. "It was shown how difficult it is to fight against these systems."

Research by the Center for Strategic and International Studies showed that Azerbaijan had a massive edge in loitering munitions, with more than 200 units of four sophisticated Israeli designs. Armenia had a single domestic model at its disposal.

Other militaries took note.

"Since the conflict, you could definitely see a certain uptick in interest in loitering munitions," said Franke. "We have seen more armed forces around the world acquiring or wanting to acquire these loitering munitions."

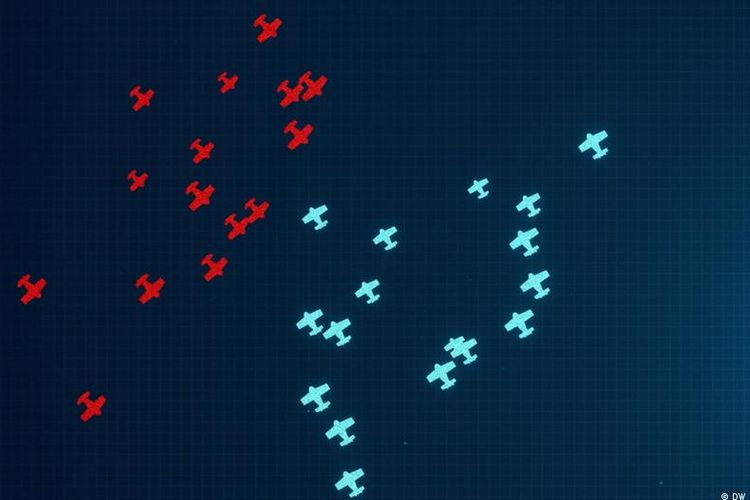

AI-driven swarm technology will soon hit the battlefield

Drone swarms and 'flash wars'

This is just the beginning. Looking ahead, AI-driven technologies such as swarming will come into military use — enabling many drones to operate together as a lethal whole.

"You could take out an air defense system, for example," said Martijn Rasser of the Center for a New American Security, a think tank based in Washington, D.C.

"You throw so much mass at it and so many numbers that the system is overwhelmed. This, of course, has a lot of tactical benefits on a battlefield," he told DW. "No surprise, a lot of countries are very interested in pursuing these types of capabilities."

The scale and speed of swarming open up the prospect of military clashes so rapid and complex that humans cannot follow them, further fueling an arms race dynamic.

As Ulrike Franke explained: "Some actors may be forced to adopt a certain level of autonomy, at least defensively, because human beings would not be able to deal with autonomous attacks as fast."

This critical factor of speed could even lead to wars that erupt out of nowhere, with autonomous systems reacting to each other in a spiral of escalation. "In the literature we call these 'flash wars'," Franke said, "an accidental military conflict that you didn't want."

Experts warn that AI-driven systems could lead to 'flash wars' erupting beyond human control

A move to 'stop killer robots'

Bonnie Docherty has made it her mission to prevent such a future. A Harvard Law School lecturer, she is an architect of the Campaign to Stop Killer Robots, an alliance of nongovernmental organizations demanding a global treaty to ban lethal autonomous weapons.

"The overarching obligation of the treaty should be to maintain meaningful human control over the use of force," Docherty told DW.

"It should be a treaty that governs all weapons operating with autonomy that choose targets and fire on them based on sensor's inputs rather than human inputs."

The campaign has been focused on talks in Geneva under the umbrella of the UN Convention on Certain Conventional Weapons, which seeks to control weapons deemed to cause unjustifiable suffering.

It has been slow going. The process has yielded a set of "guiding principles," including that autonomous weapons be subject to human rights law, and that humans have ultimate responsibility for their use. But these simply form a basis for more discussions.

Docherty fears that the consensus-bound Geneva process may be thwarted by powers that have no interest in a treaty.

"Russia has been particularly vehement in its objections," Docherty said. But it's not alone. "Some of the other states developing autonomous weapon systems such as Israel, the US, the United Kingdom and others have certainly been unsupportive of a new treaty."

Time for a rethink?

Docherty is calling for a new approach if the next round of Geneva talks due later this year makes no progress.

She has proposed "an independent process, guided by states that actually are serious about this issue and willing to develop strong standards to regulate these weapon systems."

But many are wary of this idea. Germany's foreign minister has been a vocal proponent of a ban, but he does not support the Campaign to Stop Killer Robots.

"We don't reject it in substance — we're just saying that we want others to be included," Heiko Maas told DW. "Military powers that are technologically in a position not just to develop autonomous weapons but also to use them."

Maas does agree that a treaty must be the ultimate goal. "Just like we managed to do with nuclear weapons over many decades, we have to forge international treaties on new weapons technologies," he said.

"They need to make clear that we agree that some developments that are technically possible are not acceptable and must be prohibited globally."

Germany's Heiko Maas in DW interview: 'We're moving toward a situation with cyber or autonomous weapons where everyone can do as they please'

What next?

But for now, there is no consensus. For Franke, the best the world can hope for may be norms around how technologies are used.

"You agree, for example, to use certain capabilities only in a defensive way, or only against machines rather than humans, or only in certain contexts," she said.

Even this will be a challenge. "Agreeing to that and then implementing that is just much harder than some of the old arms control agreements," she said.

And while diplomats tiptoe around these hurdles, the technology marches on.

"The world must take an interest in the fact that we're moving toward a situation with cyber or autonomous weapons where everyone can do as they please," said Maas. "We don't want that."

For more, watch the full documentary Future Wars on YouTube.